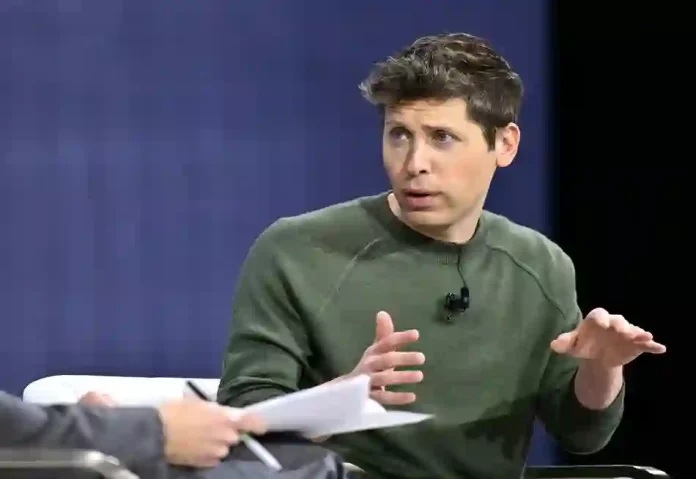

OpenAI CEO Sam Altman has sparked intense debate by suggesting that artificial general intelligence may have already arrived quietly, challenging years of industry hype surrounding this supposed technological singularity. Speaking on the Big Technology Podcast, Altman proposed reframing the conversation entirely: he claimed AGI “went whooshing by” without the world-transforming impact executives had promised, and urged a focus instead on the next frontier, superintelligence. The provocative statement arrives amid growing skepticism about AI progress and shifting priorities among major laboratories.

AGI’s Elusive and Shifting Definition

Artificial general intelligence lacks a consensus definition despite dominating tech discourse for decades. Traditionally understood as AI that matches or exceeds human cognitive versatility across intellectual domains, AGI has morphed into a marketing buzzword used interchangeably with advanced narrow systems. Altman’s dismissal acknowledges this ambiguity, suggesting that current large language models already satisfy loose interpretations of the term without delivering the prophesied economic revolutions or cognitive breakthroughs.

Altman’s benchmark for superintelligence is more concrete: systems that outperform humans as presidents, CEOs, or principal investigators, even when those humans have AI assistance. This framing sidesteps philosophical debates about consciousness while establishing a measurable threshold based on leadership capability. The accelerated timeline mirrors his 2024 prediction of AGI within five years, though diminished societal expectations temper both the earlier doomsday scenarios and the utopian promises.

Industry Leaders Grapple with Reality

Altman’s remarks echo a broader recalibration across AI leadership. Microsoft CEO Satya Nadella recently emphasized pragmatic deployment over speculative milestones, prioritizing enterprise productivity gains. Google DeepMind’s Demis Hassabis warns that society remains unprepared for the implications of AGI despite its technical feasibility, while Microsoft AI chief Mustafa Suleyman confronts the risks of conscious AI alongside a commitment to human-centric safeguards.

OpenAI’s trajectory reflects internal tensions between rapid productization and safety governance. Criticism mounts that commercialization pressures eclipse responsible development practices, particularly as Microsoft’s renewed partnership guarantees multi-billion-dollar compute access. Altman’s rhetoric strategically repositions OpenAI ahead of anticipated infrastructure constraints and regulatory scrutiny, framing iterative scaling as sufficient progress.

Technical Reality Behind the Hype

Current transformer-based architectures demonstrate remarkable pattern matching across language, code, and image domains, yet fundamental limitations persist. These systems excel at statistical prediction from internet-scale training data but struggle with genuine reasoning, causal inference, and novel scientific discovery when extensive human-generated examples are absent. Altman’s “went whooshing by” claim acknowledges this ceiling: superhuman performance on narrow evaluation benchmarks coexists with persistent commonsense failures.

Scaling laws governing compute, data, and parameter growth show diminishing returns, challenging assumptions that new capabilities will inevitably emerge with scale. Recent plateaus in benchmark scores across major laboratories suggest that architectural innovation now matters as much as brute-force training. OpenAI’s strategic pivot toward agentic systems and multimodal integration represents a pragmatic evolution rather than a paradigm shift, despite executive messaging.
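
To make the diminishing-returns point concrete, here is a minimal sketch of a power-law loss curve of the general shape that scaling-law discussions describe. The functional form, the constants (`a`, `alpha`, `floor`), and the function names are illustrative assumptions, not fitted values from any laboratory or from Altman’s remarks.

```python
def loss(compute: float, a: float = 10.0, alpha: float = 0.05, floor: float = 1.5) -> float:
    """Hypothetical power-law loss curve: floor + a * compute**(-alpha).

    All constants are made up for illustration; they are not fitted to real models.
    """
    return floor + a * compute ** (-alpha)


def gains_per_decade(start_exp: int, decades: int) -> list[float]:
    """Absolute loss reduction bought by each successive 10x increase in compute."""
    gains = []
    for k in range(start_exp, start_exp + decades):
        gains.append(loss(10.0 ** k) - loss(10.0 ** (k + 1)))
    return gains


if __name__ == "__main__":
    # Each row costs 10x more compute than the previous one.
    for k, gain in zip(range(20, 25), gains_per_decade(20, 5)):
        print(f"1e{k} -> 1e{k + 1} FLOPs: loss falls by {gain:.4f}")
```

Under these assumptions every tenfold increase in compute buys a smaller absolute improvement than the one before it, and the curve never drops below the floor, which is the pattern the paragraph above describes.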

Commercial and Economic Implications

Redefining AGI carries substantial financial consequences for publicly traded partners and venture-backed laboratories. Microsoft’s trillion-dollar AI infrastructure bet hinges less on existential breakthroughs than on sustained enterprise adoption across the Copilot ecosystem. The timing of OpenAI’s rumored 2026 IPO aligns with maturing revenue streams from API consumption and consumer applications rather than with speculative intelligence milestones.

Investor expectations recalibrate toward predictable scaling economics. Enterprise customers prioritize reliability, cost predictability, and integration simplicity over theoretical cognitive capabilities. Altman’s measured rhetoric reassures stakeholders that trillion-parameter models deliver compounding value through incremental refinement rather than requiring discrete genius thresholds.

Societal Impact Assessment

Contrary to early predictions of mass unemployment or universal prosperity, AI deployment reveals gradual sectoral disruption. Creative professions face commoditization pressures while knowledge work gains unprecedented research acceleration. Economic concentration accelerates as frontier laboratories capture disproportionate value from public data sources, prompting regulatory responses around training corpora and algorithmic rents.

Workforce transformation proves uneven—routine cognitive tasks automate rapidly while human judgment, relationship management, and physical execution remain premium skills. Altman’s muted expectations align with empirical deployment patterns, where narrow automation compounds across applications rather than triggering phase transitions. Superintelligence discourse redirects attention toward governance frameworks capable of managing concentrated capability rather than awaiting discrete intelligence explosions.

Strategic Repositioning Ahead

Altman’s timeline compression serves multiple objectives. Domestically, it manages board and investor expectations amid compute bottlenecks and organizational turbulence. Internationally, measured rhetoric counters nation-state competition narratives while positioning OpenAI favorably for regulatory engagement. Technical leadership transitions toward engineering execution—optimizing inference efficiency, expanding multimodal capabilities, and deploying reliable agent architectures.

Competitive dynamics intensify as Anthropic, Google, and xAI pursue differentiated architectures that challenge transformer hegemony. Diffusion models, state-space models, and test-time compute optimization are emerging as credible scaling alternatives. OpenAI maintains an advantage through dataset scale and deployment infrastructure, though architectural lock-in risks obsolescence without continuous innovation.

Future Trajectories and Uncertainties

The pursuit of superintelligence demands unprecedented coordination across safety, capability, and deployment research. Microsoft’s independent AGI pathway preserves competitive tension while providing redundancy against single-laboratory failures. Regulatory harmonization across jurisdictions becomes pressing as capabilities approach the leadership benchmark Altman describes, necessitating international standards for evaluation and containment.

Altman’s framework recasts AI evolution as a continuous spectrum rather than a series of discrete milestones. Capabilities compound through iterative refinement across evaluation suites, deployment contexts, and failure-mode mitigation. Organizational maturity, spanning evaluation rigor, deployment safeguards, and incident response, emerges as a binding constraint that matters more than raw compute scaling. The industry is transitioning from speculative intelligence races toward disciplined, engineering-led capability stewardship.