OpenAI's latest gpt-oss-20b model enables Mac users to run ChatGPT-style AI entirely offline—no subscription, no internet access, and no strings attached. Here's a streamlined guide to getting started.

On August 5, 2025, OpenAI released its first open-weight large language models since GPT-2, letting Apple Silicon Mac users run advanced AI tools without an internet connection or subscription. Many Macs can now handle this kind of AI processing locally.

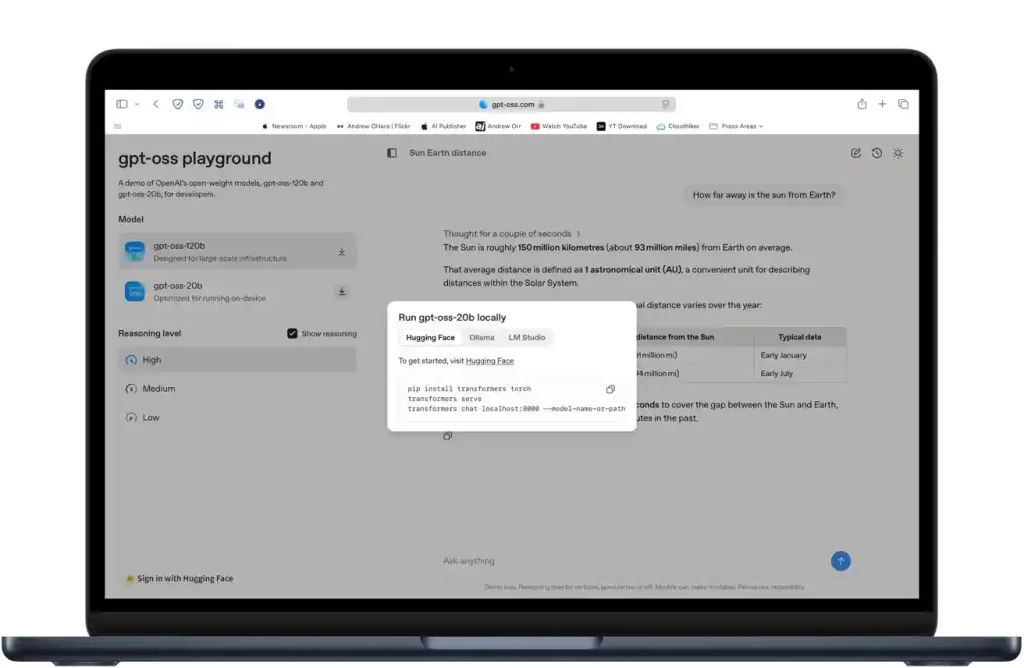

Previously, running powerful AI models required costly cloud services or complex server setups. OpenAI’s new gpt-oss-20b and gpt-oss-120b models have changed this with downloadable weights compatible with popular local AI platforms like LM Studio and Ollama.

You can even try the model in your browser first at gpt-oss.com, which offers free demos showcasing its writing, coding, and conversational abilities.

System Requirements for Smooth Performance

To run gpt-oss-20b effectively, an Apple Silicon Mac with at least an M2 chip and 16GB of RAM is recommended. For older M1 machines, the Max or Ultra variants are advised. Macs with extra cooling, such as the Mac Studio, are the best fit because sustained AI workloads are demanding.

On lighter machines like a MacBook Air with an M3 chip and 16GB of RAM, the model runs, but the machine can heat up and responses arrive more slowly, much like running a high-end game on the same hardware.
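As a rough illustration, the recommendation above can be turned into a quick self-check. This is a minimal sketch, not an official requirements tool: the function names are made up for this example, the 16GB threshold is the figure quoted in this article, and the check does not distinguish M1 from M2 chips. It reads installed memory through the macOS `sysctl -n hw.memsize` command.

```python
import platform
import subprocess

MIN_RAM_GB = 16  # recommendation quoted in this article, not an official limit


def meets_recommendation(system: str, machine: str, ram_gb: float) -> bool:
    """Return True for an Apple Silicon Mac with at least MIN_RAM_GB of memory."""
    return system == "Darwin" and machine == "arm64" and ram_gb >= MIN_RAM_GB


def installed_ram_gb() -> float:
    """Read physical memory on macOS; `sysctl -n hw.memsize` prints bytes."""
    result = subprocess.run(
        ["sysctl", "-n", "hw.memsize"],
        capture_output=True,
        text=True,
        check=True,
    )
    return int(result.stdout.strip()) / 1024**3


if __name__ == "__main__":
    system, machine = platform.system(), platform.machine()
    # Only call sysctl on macOS; elsewhere report 0 so the check simply fails.
    ram = installed_ram_gb() if system == "Darwin" else 0.0
    verdict = "meets" if meets_recommendation(system, machine, ram) else "is below"
    print(f"{system} {machine}, {ram:.0f} GB RAM: {verdict} the recommendation")
```

One caveat: a Python build running under Rosetta may report `x86_64` even on Apple Silicon, so treat a negative result as a hint rather than a verdict.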

Getting Started: Essential Tools

You’ll need one of these local AI management apps:

- LM Studio: A free app with a user-friendly graphical interface.

- Ollama: A command-line tool designed for easy management and running of AI models.

- MLX: Apple's machine learning framework for Apple Silicon. It is an acceleration layer rather than a standalone chat app, and tools such as LM Studio can use it under the hood for better performance.

These tools simplify downloading, setting up, and running the models without hassle.

Running the Model with Ollama

Here’s how to use Ollama to quickly launch gpt-oss-20b:

- Install Ollama from ollama.com following their setup instructions.

- Open Terminal and run: ollama run gpt-oss:20b

- Ollama will download the optimized 4-bit version (~12 GB) and take care of all setup steps.

- After loading, start chatting with the AI directly on your Mac, no internet required.

While slower than cloud GPT-4, responses come entirely offline, preserving privacy and avoiding network delays.
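Once the model is running, Ollama also serves a local HTTP API, which is handy for scripting. The sketch below assumes the default endpoint `http://localhost:11434/api/generate` and the model tag `gpt-oss:20b` from Ollama's model library; adjust both if your setup differs. The helper names `build_request` and `ask` are made up for this example.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL = "gpt-oss:20b"  # assumed tag; change it if your installed model is named differently


def build_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt and return the generated text (requires Ollama to be running)."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


# Example (uncomment with Ollama running and the model downloaded):
# print(ask("Summarize the benefits of running a language model locally."))
```

The request never leaves your machine, so this works offline once the model has been downloaded. The generous timeout is deliberate, since local generation on a laptop can take a while.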

Performance and Limitations

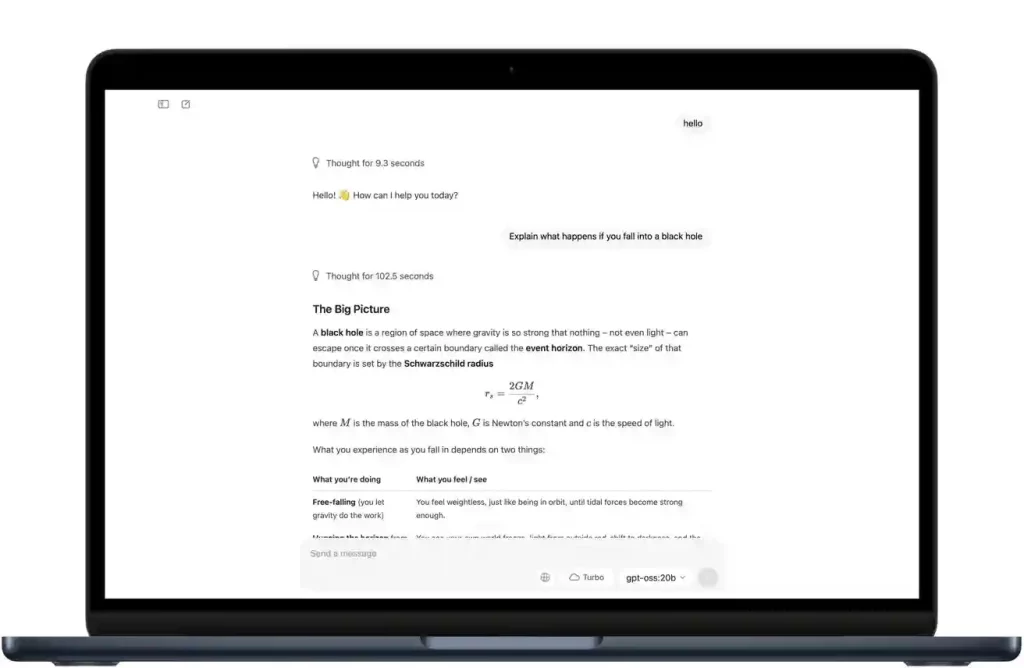

The 20-billion-parameter model is quantized to a 4-bit format, allowing it to run on Macs with 16GB RAM efficiently. It excels at:

- Writing and summarizing text

- Answering questions

- Generating and debugging code

- Executing structured function calls

Though it's slower and less polished than cloud-based GPT-4, it is sufficiently responsive for personal use and development. The larger 120-billion-parameter model demands 60-80GB of RAM, which is feasible mostly on high-end workstations or research setups.
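The memory figures above follow from simple arithmetic: parameter count times bits per weight, divided by 8 to get bytes. The sketch below is a back-of-envelope estimate for the weights alone, using the round parameter counts from this article. Real downloads and runtime usage are typically higher, because some tensors are usually kept at higher precision and the runtime needs extra memory for context.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Round parameter counts taken from the article; treat results as rough estimates.
for name, params in [("gpt-oss-20b", 20), ("gpt-oss-120b", 120)]:
    four_bit = weight_memory_gb(params, 4)
    sixteen_bit = weight_memory_gb(params, 16)
    print(f"{name}: ~{four_bit:.0f} GB at 4-bit, ~{sixteen_bit:.0f} GB at 16-bit")
```

By this estimate the 20B model needs about 10 GB for 4-bit weights, which is why it fits in 16GB of RAM, while the 120B model starts at about 60 GB before any overhead.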

Benefits of Running AI Locally

- Privacy: Your data never leaves your device.

- Cost: No subscription fees or API costs.

- Latency: No network round trips, though generation speed depends on your hardware.

- Customization: Apache 2.0 license allows fine-tuning for bespoke workflows.

Practical Considerations

gpt-oss-20b strikes a balance between offline independence and usability. It's reliable and free, though it is sometimes slower than cloud models and its output may need minor manual refinement on complex tasks. For casual writing, coding, and research offline, it's a strong candidate.

If offline use is a priority, it’s among the best AI tools available for Macs today. However, for lightning-fast, highly accurate output, cloud-based AI remains the top choice.

Tips for Optimal Use

- Use the quantized 4-bit MXFP4 version to cut memory use substantially while largely preserving accuracy.

- If your Mac has less than 16GB of RAM, opt for smaller models (3–7 billion parameters).

- Close memory-heavy applications before starting a session.

- Enable MLX or Metal acceleration where available for faster responses.

With the right setup, your Mac can become a powerful, private, offline AI workstation, with no subscriptions, no internet requirement, and full control over your data. It won't replace high-end cloud models for every task, but it's a capable, secure option for many use cases.