Nvidia has unveiled what engineers call the most significant CUDA upgrade in nearly two decades, positioning the company even further ahead in the AI hardware race. The CUDA 13.1 release introduces sweeping new programming abstractions that make GPU development dramatically easier while locking developers deeper into Nvidia’s ecosystem. Because much of the improvement lives in software, existing Nvidia GPUs can pick up some gains through a toolkit upgrade alone, although the headline tile programming model initially targets Blackwell-generation hardware.

CUDA, the parallel computing platform powering Nvidia’s dominance, now features tile-based programming that abstracts away hardware complexities. Developers concentrate on algorithms while the compiler and runtime handle much of the mapping onto tensor cores and memory hierarchies. This shift toward higher-level programming echoes CEO Jensen Huang’s vision of making computing accessible to people who are not traditional programmers.

Understanding CUDA’s Critical Role

CUDA serves as the essential software layer unlocking Nvidia GPUs’ massive parallel processing capabilities. While headlines focus on GPU shipments, CUDA delivers the real competitive moat through seamless integration with popular languages and frameworks. Developers leverage extensive libraries for AI training, scientific simulations, and real-time rendering without reinventing low-level optimizations.

The platform’s stickiness creates software-like economics, with Nvidia maintaining 67% gross margins over five years. Estimates of Nvidia’s share of the AI accelerator market range from 70% to 95%, making CUDA the de facto standard for modern machine learning workflows. Each new GPU launch inherits the value of the libraries and tools the ecosystem has already accumulated.

CUDA Tile Transforms Programming Paradigm

The headline innovation, CUDA Tile, introduces a virtual instruction set for tile-based parallel programming. Developers work with data “tiles” rather than manually orchestrating thousands of threads across GPU cores. The compiler then maps tile operations onto the hardware, aiming for efficient tensor core utilization and memory access patterns.
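To make the tile idea concrete, here is a minimal sketch in plain Python of a tiled matrix multiply. It is not the CUDA Tile or cuTile API; the tile size and every function name are hypothetical. Each call to `compute_output_tile` stands in for one unit of GPU work that owns a square piece of the output and walks along the shared dimension one tile at a time, so the code never reasons about individual threads.

```python
# Conceptual sketch only: plain Python, not the CUDA Tile or cuTile API.
# Each call to compute_output_tile plays the role of one GPU block that owns
# one TILE x TILE piece of the output; no per-thread indexing appears.

TILE = 2  # hypothetical tile edge length, kept small for readability


def load_tile(m, row0, col0, size):
    """Copy a size x size tile out of matrix m, zero-padding past the edges."""
    rows, cols = len(m), len(m[0])
    return [
        [m[r][c] if r < rows and c < cols else 0.0
         for c in range(col0, col0 + size)]
        for r in range(row0, row0 + size)
    ]


def tile_matmul_acc(acc, a_tile, b_tile):
    """acc += a_tile @ b_tile for square tiles (the step a tensor core would run)."""
    n = len(acc)
    for i in range(n):
        for j in range(n):
            acc[i][j] += sum(a_tile[i][k] * b_tile[k][j] for k in range(n))


def compute_output_tile(a, b, tile_row, tile_col, size):
    """Work done by one 'block': march along K one tile at a time."""
    k_dim = len(a[0])
    acc = [[0.0] * size for _ in range(size)]
    for k0 in range(0, k_dim, size):
        a_tile = load_tile(a, tile_row * size, k0, size)
        b_tile = load_tile(b, k0, tile_col * size, size)
        tile_matmul_acc(acc, a_tile, b_tile)
    return acc


def tiled_matmul(a, b, size=TILE):
    m, n = len(a), len(b[0])
    c = [[0.0] * n for _ in range(m)]
    # The "grid": one independent unit of work per output tile.
    for tr in range((m + size - 1) // size):
        for tc in range((n + size - 1) // size):
            tile = compute_output_tile(a, b, tr, tc, size)
            for i in range(size):
                for j in range(size):
                    r, col = tr * size + i, tc * size + j
                    if r < m and col < n:  # drop the zero-padded edge
                        c[r][col] = tile[i][j]
    return c
```

For example, `tiled_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])` yields `[[19, 22], [43, 50]]` (as floats). Zero-padding the ragged edge tiles keeps the per-tile code uniform, which is the kind of bookkeeping a tile compiler is meant to take off the programmer’s hands.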

Nvidia engineers Jonathan Bentz and Tony Scudiero emphasize that developers can write algorithms at a higher level of abstraction. Hardware details such as cache hierarchies and execution pipelines are largely hidden from the programmer, which can shorten development cycles significantly. The model is designed to carry forward across GPU generations; at launch, CUDA Tile targets Blackwell-class GPUs, a family that spans consumer GeForce RTX 50-series cards and enterprise Blackwell systems.

Key Technical Advancements

CUDA 13.1 delivers comprehensive performance and usability improvements.

– cuTile for Python enables data scientists to write high-performance GPU code without C++ expertise.

– Green contexts partition a GPU’s streaming multiprocessors among workloads, giving each a dedicated share of compute.

– Enhanced Multi-Process Service (MPS) gives concurrent applications more control over how they share a GPU, reducing resource contention.

– Blackwell GPUs achieve up to 4x faster grouped matrix multiplies, a core operation in the mixture-of-experts layers of many transformer models.

– Streamlined debugging tools reduce iteration times during model development.
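A “grouped” matrix multiply is easiest to understand by what it computes: a batch of independent matrix products whose shapes may differ. In a mixture-of-experts layer, each expert receives a different number of tokens, so each product has a different row count. The sketch below reproduces only that math in plain Python; it is not the cuBLAS interface, and the routing helper is hypothetical. A library version gains its speed by scheduling all groups together instead of launching one kernel per problem, which this sketch does not attempt.

```python
# Conceptual sketch of what a grouped matrix multiply computes: many
# independent GEMMs with possibly different shapes, submitted as one batch.
# Plain Python; not the cuBLAS API. route_tokens is a hypothetical helper.

def matmul(a, b):
    """Reference GEMM: (m x k) @ (k x n) -> (m x n)."""
    k = len(b)
    assert all(len(row) == k for row in a), "inner dimensions must match"
    n = len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(n)] for row in a]


def grouped_matmul(groups):
    """Compute A_i @ B_i for every (A_i, B_i) pair in one call.

    A real grouped kernel can schedule all groups in a single launch; this
    sketch only reproduces the results, not that scheduling.
    """
    return [matmul(a, b) for a, b in groups]


def route_tokens(tokens, expert_ids, num_experts):
    """Hypothetical MoE routing: bucket token rows by their assigned expert."""
    buckets = [[] for _ in range(num_experts)]
    for tok, e in zip(tokens, expert_ids):
        buckets[e].append(tok)
    return buckets
```

With three tokens routed to experts `[0, 1, 0]`, expert 0 multiplies a 2-row matrix and expert 1 a 1-row matrix; the uneven group sizes are exactly what a grouped kernel has to handle efficiently.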

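These optimizations compound across Nvidia’s installed base. Library-level gains arrive with a toolkit upgrade, though several headline features, including CUDA Tile and the grouped matrix multiply speedups, depend on Blackwell-generation GPUs.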

Developer Productivity Revolution

cuTile gives Python developers an array-style API that will feel familiar to NumPy and PyTorch users. The compiler maps data tiles onto memory layouts, reducing the manual tuning common in previous workflows, and kernels are compiled just-in-time for the specific GPU they run on.
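The programming style described above, writing a kernel body once per tile and letting a launcher run it over a grid, can be modeled in plain Python. This is a minimal sketch under that assumption, not the cuTile API: `add_kernel`, `launch`, and `TILE_SIZE` are hypothetical names chosen for illustration. The grid size is the ceiling of the array length divided by the tile size, and the final tile is clipped when the length is not a multiple of the tile size.

```python
# Conceptual model of tile-style kernels in plain Python (not the cuTile API).
# The kernel body handles one tile; a hypothetical launcher runs it once per
# tile index, the way a GPU would run one block per tile.
import math

TILE_SIZE = 4  # hypothetical tile size


def add_kernel(tile_id, a, b, out, tile_size):
    """Body for one tile: load a slice, add element-wise, store the slice."""
    start = tile_id * tile_size
    stop = min(start + tile_size, len(a))  # clip the ragged last tile
    a_tile = a[start:stop]
    b_tile = b[start:stop]
    out[start:stop] = [x + y for x, y in zip(a_tile, b_tile)]


def launch(kernel, n, *args, tile_size=TILE_SIZE):
    """Run the kernel once per tile; the grid size is ceil(n / tile_size)."""
    grid = math.ceil(n / tile_size)
    for tile_id in range(grid):
        kernel(tile_id, *args, tile_size)
    return grid
```

Adding two 10-element lists with a tile size of 4 launches three tiles, the last covering only two elements. The kernel body never mentions threads, which is the shift in mindset the tile model asks for.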

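Green contexts bring fine-grained resource partitioning rather than power management: instead of adjusting clock speeds or memory bandwidth, each green context is bound to a fixed subset of the GPU’s streaming multiprocessors (SMs). A latency-sensitive inference server can keep a reserved slice of the chip while training or batch jobs run on the rest. This granularity helps prevent resource starvation in multi-tenant data center environments.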
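The partitioning idea behind green contexts can be sketched as splitting a pool of SM indices into disjoint groups. The plain-Python model below is an illustration under stated assumptions: the SM count, the rounding granularity, and the function name are hypothetical, and the real CUDA calls for creating green contexts are not shown.

```python
# Conceptual sketch of the idea behind green contexts: divide a GPU's SMs into
# disjoint groups, each backing its own context, so work in one partition
# cannot occupy SMs reserved for another. The SM count, granularity, and
# function name are assumptions, not values or calls from the CUDA driver.

def split_sms(total_sms, requests, granularity=2):
    """Assign disjoint SM id ranges to named partitions.

    requests: list of (name, min_sms). Each request is rounded up to a
    multiple of `granularity`; leftover SMs form a 'remaining' group.
    """
    partitions = {}
    next_sm = 0
    for name, want in requests:
        size = -(-want // granularity) * granularity  # ceiling to granularity
        if next_sm + size > total_sms:
            raise ValueError(f"not enough SMs left for {name!r}")
        partitions[name] = list(range(next_sm, next_sm + size))
        next_sm += size
    partitions["remaining"] = list(range(next_sm, total_sms))
    return partitions
```

On a hypothetical 16-SM device, requesting 5 SMs for inference and 8 for training yields SMs 0 to 5 for inference (rounded up to 6), SMs 6 to 13 for training, and SMs 14 and 15 left over. Because the ranges are disjoint, a burst of training work cannot crowd out the inference partition.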

Strategic Market Implications

The update arrives amid intensifying competition from AMD, Intel, and custom silicon developers. CUDA 13.1 widens the programming experience gap, making migration away from Nvidia increasingly painful for established teams. Enterprises with large, heavily tuned CUDA codebases face steep porting costs.

Wall Street analysts highlight CUDA’s role in sustaining Nvidia’s premium valuations. Each software release extends the useful life of existing hardware while preempting rival platforms before they mature. Blackwell’s up-to-4x speedup on grouped matrix multiplies makes next-generation clusters a compelling upgrade path for hyperscalers.

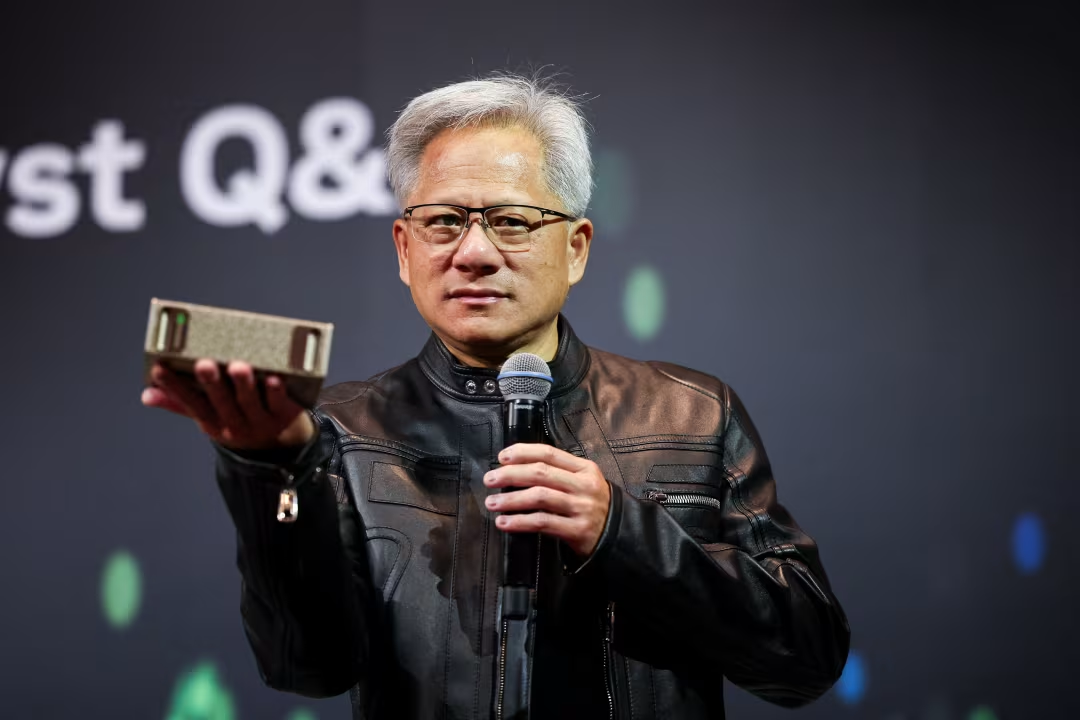

Enabling Jensen Huang’s Vision

CEO Jensen Huang’s prediction of programming’s “death” finds support in CUDA 13.1’s abstractions. Developers express intent through high-level tile operations while the compiler generates optimized machine code. This paradigm shift broadens GPU acceleration beyond specialized teams.

Future iterations could bring tighter integration with human language, potentially generating tile programs from natural-language specifications. Such a roadmap would align with Huang’s goal of universal programming accessibility, turning domain experts into GPU-accelerated innovators.

Immediate Performance Gains Across Ecosystem

Existing CUDA code keeps running unchanged, and teams can adopt tile kernels incrementally alongside it. Mixed workloads benefit from partitioning features such as green contexts and MPS, which reduce interference between training, inference, and visualization pipelines. Data center operators can raise GPU utilization without architectural changes.

The update solidifies Nvidia’s multi-year lead in production AI infrastructure. Competitors must match this software velocity while simultaneously closing hardware gaps—an extraordinarily difficult dual-track challenge.